Fitting a Lattice to Data

2024 Sep 30

Let's say we have a bunch of datapoints in

ℝ that are expected to lie on some lattice, with some noise in the measured positions. We'd like to fit a lattice to these points that hopefully matches the ground truth lattice well. Since just by choosing a very fine lattice we can get an arbitrarily small error without doing anything interesting, there also needs to be some penalty on excessively fine lattices. This is a bit of a strange problem, and an algorithm for it will be presented here.

method

Since this is a lattice problem, the first question to jump to mind should be if we can use the

LLL algorithm in some way.

One application of the LLL algorithm is to find integer relations between a given set of real numbers.

[wikipedia] A matrix is formed with those real numbers (scaled up by some factor

ζ) making up the bottom row, and an identity matrix sitting on top. The algorithm tries to make the basis vectors (the column vectors of the matrix) short, but it can only do so by making integer combinations of the basis vectors. By trying to make the bottom entry of each basis vector small, the algorithm is able to find an integer combination of real numbers that gives 0 (if one exists).

But there's no reason the bottom row has to just be real numbers. We can put in vectors instead, filling up several rows with their entries. The concept should work just the same, and now instead of combining real numbers, we're combining vectors.

For example, say we have 4 datapoints in three dimensional space,

x = (x, y, z). The we'd use the following matrix as input to the LLL algorithm.

| 1 | | | |

| | 1 | | |

| | | 1 | |

| | | | 1 |

| ζx | ζx | ζx | ζx |

| ζy | ζy | ζy | ζy |

| ζz | ζz | ζz | ζz |

Here, ζ is a tunable parameter. The larger the value of ζ, the more significant any errors in the lower 3 rows will be. So fits with a large ζ value will be more focused on having a close match to the given datapoints. On the other hand, if the value of ζ is small, then the significance of the upper 4 rows is relatively more important, which means the fit will try and interpret the datapoints as small integer multiples of the basis lattice vectors.

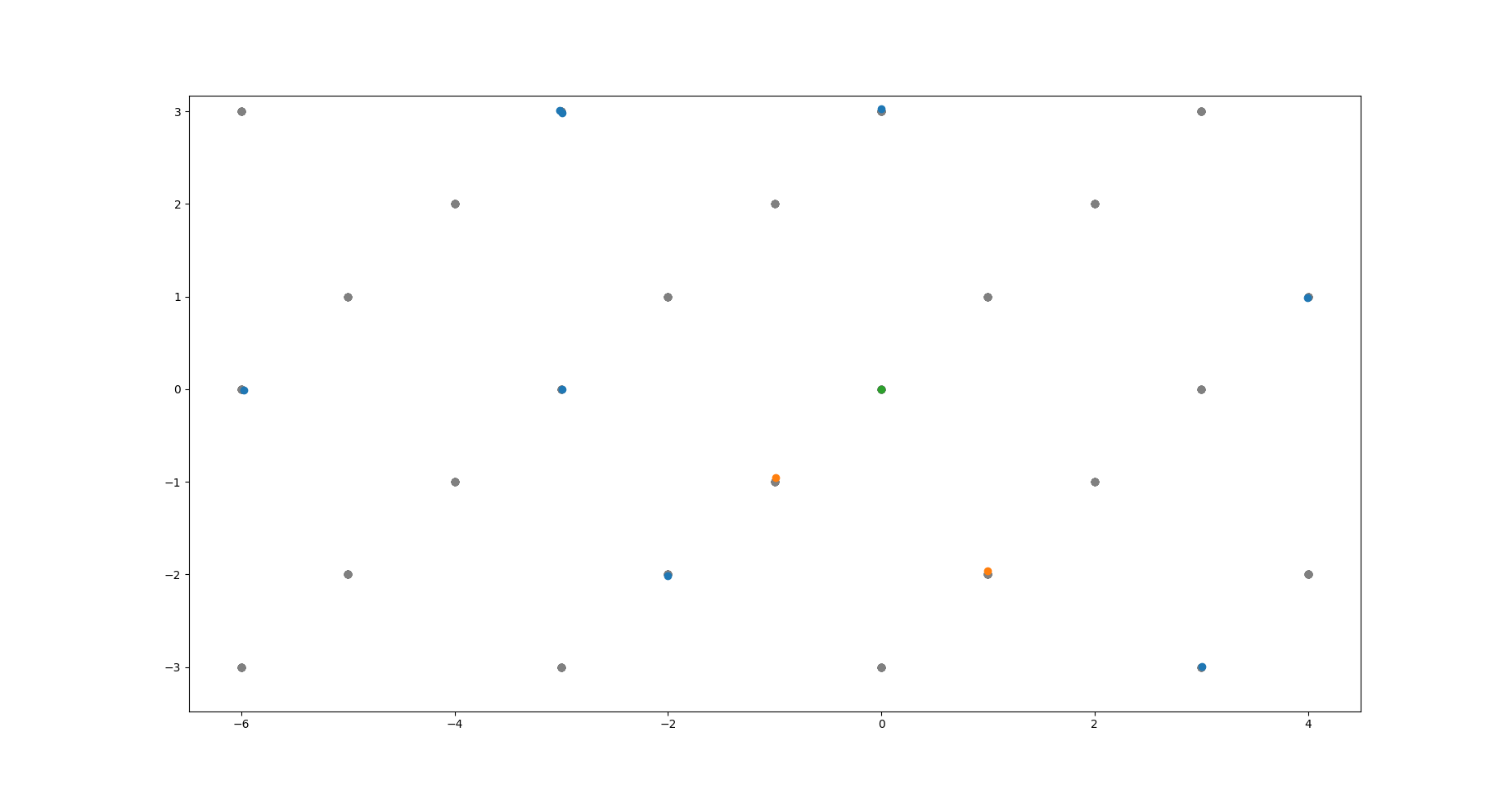

The above image shows the algorithm at work. Green dot is the origin. Grey dots are the underlying lattice (ground truth). Blue dots are the noisy data points the algorithm takes as input. Yellow dots are the lattice basis vectors returned by the algorithm.

code link

Run lattice_fit.py to get a quick demo.

API: Import lattice_fit and then call lattice_fit(dataset, zeta) where dataset is a 2d numpy array. First index into the dataset selects the datapoint, and the second selects a coordinate of that datapoint. zeta is just a float, whose effect was described above. The result will be an array of basis vectors, sorted from longest to shortest. These will approach zero length at some point, and it's your responsibility as the caller to cut off the list there. (Or perhaps you already know the size of the lattice basis.)

caveats

Admittedly, due to the large (though still polynomial) time complexity of the LLL algorithm, this method scales poorly with the number of data points. The best suggestion I have so far here is just to run the algorithm on manageable subsets of the data, filter out the near-zero vectors, and then run the algorithm again on all the basis vectors found this way.

...

I originally left this as a

stack overflow answer that I came across when initially searching for a solution to this problem.