Cumulants

2023 Jul 29

Introduction

This article is about

cumulants. We'll look at the basic property cumulants have that makes them so useful (a generalization of the fact you can add together the variances of independent random variables to get the variance of their sum). Then we'll look at the implications for computing Gaussian integrals.

Cumulants add for independent variables

Suppose that X is a random variable in ℝ...

We can compute the moments of X, which are the expectation values of its powers:

There's a clever trick for computing these values. Say that the probability density for X is ρ(x). Then we define the moment generating function for X to be:

Then we can compute moments like so:

This is because we can expand the definition of m in terms of moments as a power series:

Now consider

⟨X⟩. This is the expected square of

X, however the variance is a slightly different quantity, namely:

Var(X) = ⟨X⟩ - ⟨X⟩ This is much more useful for a variety of applications. Why is this so? Consider what happens when we add together two independent random variables,

X, Y. Then:

Var(X + Y) = ⟨(X+Y)⟩ - ⟨X+Y⟩ = ⟨X⟩ + 2⟨XY⟩ + ⟨Y⟩ - ⟨X⟩ - 2⟨X⟩⟨Y⟩ - ⟨Y⟩

Now by independence, we know that ⟨XY⟩ = ⟨X⟩⟨Y⟩ so we get the following after cancelling:

Var(X + Y) = ⟨X⟩ + ⟨Y⟩ - ⟨X⟩ - ⟨Y⟩ = Var(X) + Var(Y)

So variances have the following very convenient property that isn't true for mere second moments: The variances of independent variables add! If we want the variance of the sum of a bunch of independent random variables, we just need to add together the variances of each variable separately.

This is a very useful property for a statistic to have. Can we generalize it? Say we want to come up with a statistic that is to the third moment as the variance is to the second moment. Can we come up with some polynomial in the moments of

X that starts with

⟨X⟩ and that can be computed for a sum of independent variables by adding its values for each individual variable?

Since we know what we want the first term to be, we'll start with that and expand:

⟨(X+Y)⟩ = ⟨X⟩ + 3⟨XY⟩ + 3⟨XY⟩ + ⟨Y⟩

By independence:

⟨(X+Y)⟩ = ⟨X⟩ + 3⟨X⟩⟨Y⟩ + 3⟨X⟩⟨Y⟩ + ⟨Y⟩

The other expectations we're allowed to use are the linear and quadratic ones:

⟨(X+Y)⟩ = ⟨X⟩ + 2⟨X⟩⟨Y⟩ + ⟨Y⟩

⟨X+Y⟩ = ⟨X⟩ + ⟨Y⟩

Since we want to get something third degree (to cancel out all the third degree terms in

⟨(X+Y)⟩, pretty much our only option is to multiply together the first and second moments, or to cube the first moment. This covers all the partitions of 3:

3, 2 + 1, 1 + 1 + 1.

⟨X+Y⟩⟨(X+Y)⟩ = ⟨X⟩⟨X⟩ + 2⟨X⟩⟨Y⟩ + ⟨X⟩⟨Y⟩ + ⟨X⟩⟨Y⟩ + 2⟨X⟩⟨Y⟩ + ⟨Y⟩⟨Y⟩

We'll try subtracting 3 of this quantity to cancel out the middle terms:

⟨(X+Y)⟩ - 3⟨X+Y⟩⟨(X+Y)⟩ = ⟨X⟩ + ⟨Y⟩ - 3⟨X⟩⟨X⟩ - 6⟨X⟩⟨Y⟩ - 6⟨X⟩⟨Y⟩ - 3⟨Y⟩⟨Y⟩

We'll group the X-only terms and Y-only terms together. We're still left with some mixed terms, these can be cancelled by using the 1 + 1 + 1 partition of 3:

⟨(X+Y)⟩ - 3⟨X+Y⟩⟨(X+Y)⟩ = ⟨X⟩ - 3⟨X⟩⟨X⟩ + ⟨Y⟩ - 3⟨Y⟩⟨Y⟩ - 6⟨X⟩⟨Y⟩ - 6⟨X⟩⟨Y⟩

⟨(X+Y)⟩ - 3⟨X+Y⟩⟨(X+Y)⟩ + 2⟨X+Y⟩⟨X+Y⟩⟨X+Y⟩ = ⟨X⟩ - 3⟨X⟩⟨X⟩ + ⟨Y⟩ - 3⟨Y⟩⟨Y⟩ - 6⟨X⟩⟨Y⟩ - 6⟨X⟩⟨Y⟩ + 2⟨X⟩ + 6⟨X⟩⟨Y⟩ + 6⟨X⟩⟨Y⟩ + 2⟨X⟩

⟨(X+Y)⟩ - 3⟨X+Y⟩⟨(X+Y)⟩ + 2⟨X+Y⟩⟨X+Y⟩⟨X+Y⟩ = ⟨X⟩ - 3⟨X⟩⟨X⟩ + 2⟨X⟩ + ⟨Y⟩ - 3⟨Y⟩⟨Y⟩ + 2⟨X⟩

Say Z = X + Y. Then:

⟨Z⟩ - 3⟨Z⟩⟨Z⟩ + 2⟨Z⟩ = (⟨X⟩ - 3⟨X⟩⟨X⟩ + 2⟨X⟩) + (⟨Y⟩ - 3⟨Y⟩⟨Y⟩ + 2⟨Y⟩)

This is exactly the property we wanted. We'll call this the third cumulant, and the variance is the second cumulant. The first cumulant is just the same as the first moment, it's the mean. Here's our notation for the cumulants:

⟨X⟩ = ⟨X⟩ - 3⟨X⟩⟨X⟩ + 2⟨X⟩

As you might guess, this can be continued indefinitely. To find the

nth cumulant, you could keep solving as we were before. Just start with

⟨(X+Y)⟩ and keep subtracting or adding terms corresponding to partitions of

n to cancel out terms that mix moments of

X and

Y until you're left with only unmixed terms.

The cumulant generating function

But there is also a more systematic way, or at least more elegant. We simply observe that:

What? We can check for the first few terms that this does indeed do the right thing (note that m(0) = 1, no matter what the distribution is):

log m(t) = - = m''(t) - m'(t)

log m(t) = - 3 + 2 = m'''(t) - 3m'(t)m''(t) + 2m'(t)

log m(t) = - 4 - 3 + 12 - 6 = m''''(t) - 4m'(t)m'''(t) - 3m''(t) + 12m'(t)m''(t) - 6m'(t)

This last line suggests that the fourth cumulant should be:

⟨X⟩ = ⟨X⟩ - 4⟨X⟩⟨X⟩ - 3⟨X⟩ + 12⟨X⟩⟨X⟩ - 6⟨X⟩

This is indeed correct. We can see why in general this formula should give cumulants that add for independent variables. First note:

So, suppose as above that X and Y are independent random variables. Then:

By independence, this equals:

log (⟨e⟩⟨e⟩) = log ⟨e⟩ + log ⟨e⟩

So the entire function

log ⟨e⟩ adds for independent variables. (This function is called the cumulant generating function.) Since the derivatives of this function are the cumulants, they add too.

Moments and Gaussian integrals

Consider an integral like:

where P(x) is some polynomial. To compute it, we can just break down the polynomial into terms, and then for each term we're left with an integral of the form:

Right away, we can see that if

n is odd, then the integral will be 0. But that's skipping ahead a bit. Let's instead treat the integral as an expectation over a standard normal probability distribution. We have to keep in mind that the normalizing factor for such a distribution is

, but other than that, we can just use cumulants to solve the problem. So let's say this is the integral we're trying to solve, we can observe that it's just a moment of the standard normal distribution:

The moment generating function for the normal distribution:

So we can see that the moment generating function is

m(t) = e. Computing its derivatives:

m'(t) = tm(t)

m''''(t) = (3 + 6t + t)m(t)

m'''''(t) = (15t + 10t + t)m(t)

m''''''(t) = (15 + 45t + 15t + t)m(t)

When we evaluate at 0, we get a sequence of moments that goes: 1, 0, 1, 0, 3, 0, 15...

It turns out this sequence is 0 at odd

n and equal to the product of all odd numbers less than

n at even

n. So the next term should be 0, and the one after that should be

3×5×7 = 105. But why is this so? Note that only the constant factor in front of

m(t) has any contribution to the value of the moment. Terms like

45tm(t) have no contribution because we compute moments at

t = 0.

Each time we apply a derivative, we can either pull down a new factor of t or differentiate an existing factor of t. To end up with a constant, we need to pull down the same number of factors as we differentiate. The full derivative is the sum over all possible choices of pulling down a new factor and differentiating an old one.

To make that intuition more rigorous and precise, we do something a little bit weird.

m''''(t) is the fourth derivative of the generating function. What we do is make each of those four derivatives unique, so we can better keep track of them. One way to do that is to have:

t = τ = τ = τ = τ. Then our derivatives are abbreviated as

d = . Since all these tau's are secretly the same number, these derivatives obey the following law:

d τ = 1 Now we can do the computation:

When applying the first derivative, we have no choice but to pull down a tau.

When applying the second derivative, we apply the product rule and get a sum of the derivatives of the left and right side of the product. Combinatorially, we can think of this as a choice, where we can either differentiate the exponential (which pulls down

τ), or the tau we've already pulled down (which yields

1).

Now we're dealing with

d. For the first term, there aren't any taus for us to differentiate, so the only option available is to pull down

τ. For the second term, we have a choice: we can differentiate

τ or

τ. (Note that this choice of which tau to differentiate translates to getting a factor of 2 when computing

(t)' = 2t.) We can also pull down a

τ into this term.

Now, the

τττ has too many taus in it to be reduced to a constant by our remaining supply of derivatives. Remember, this calculation is happening with all the taus equal to 0, and so as long as we're careful about the degree of each term as compared to the number of derivatives remaining to be applied, we can safely ignore such terms.

As we apply the final derivative, we can apply it to any of these terms. We could also pull down a

τ, but this would again result in a 0 term, which we can safely ignore. So overall, we get:

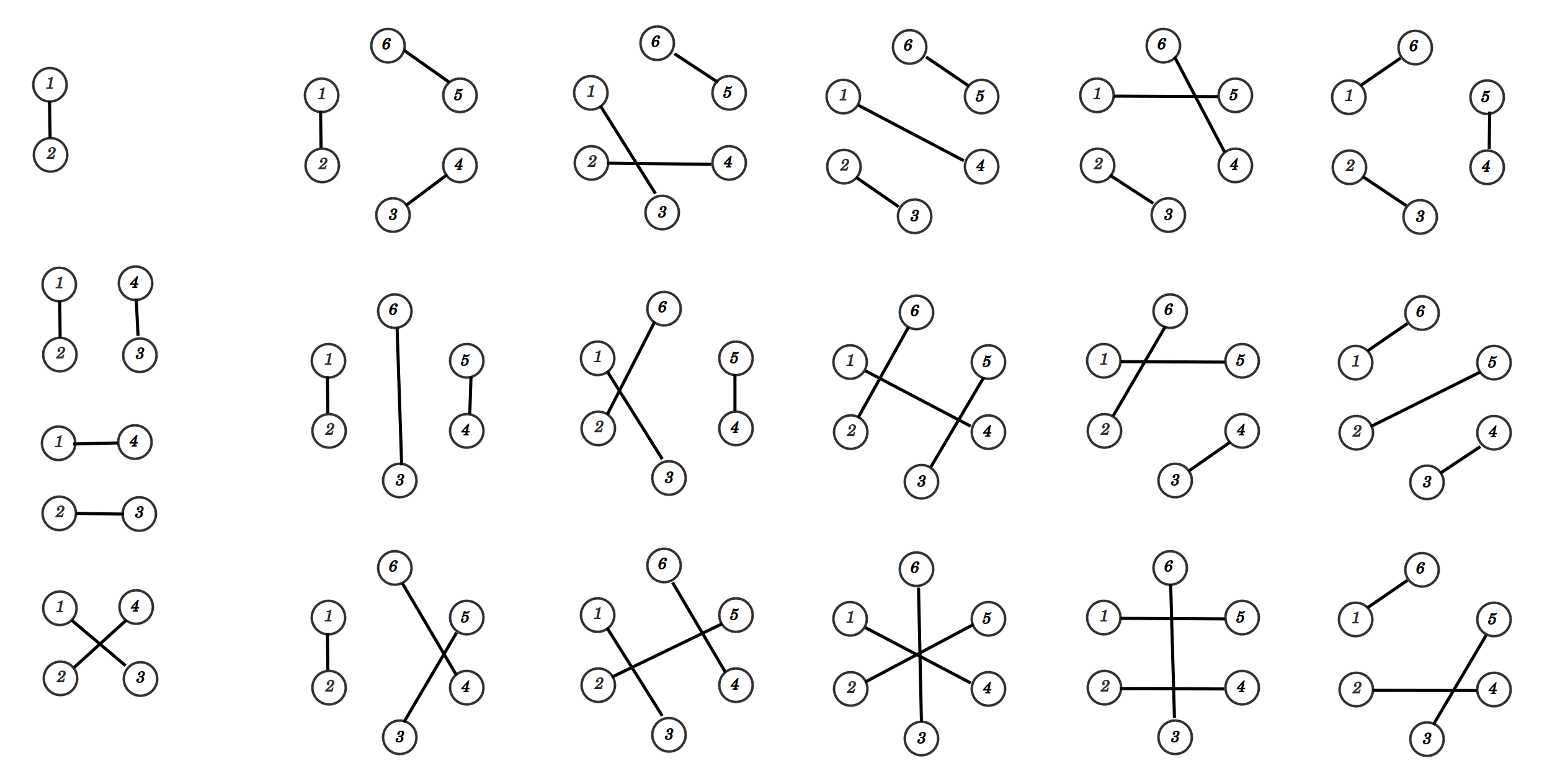

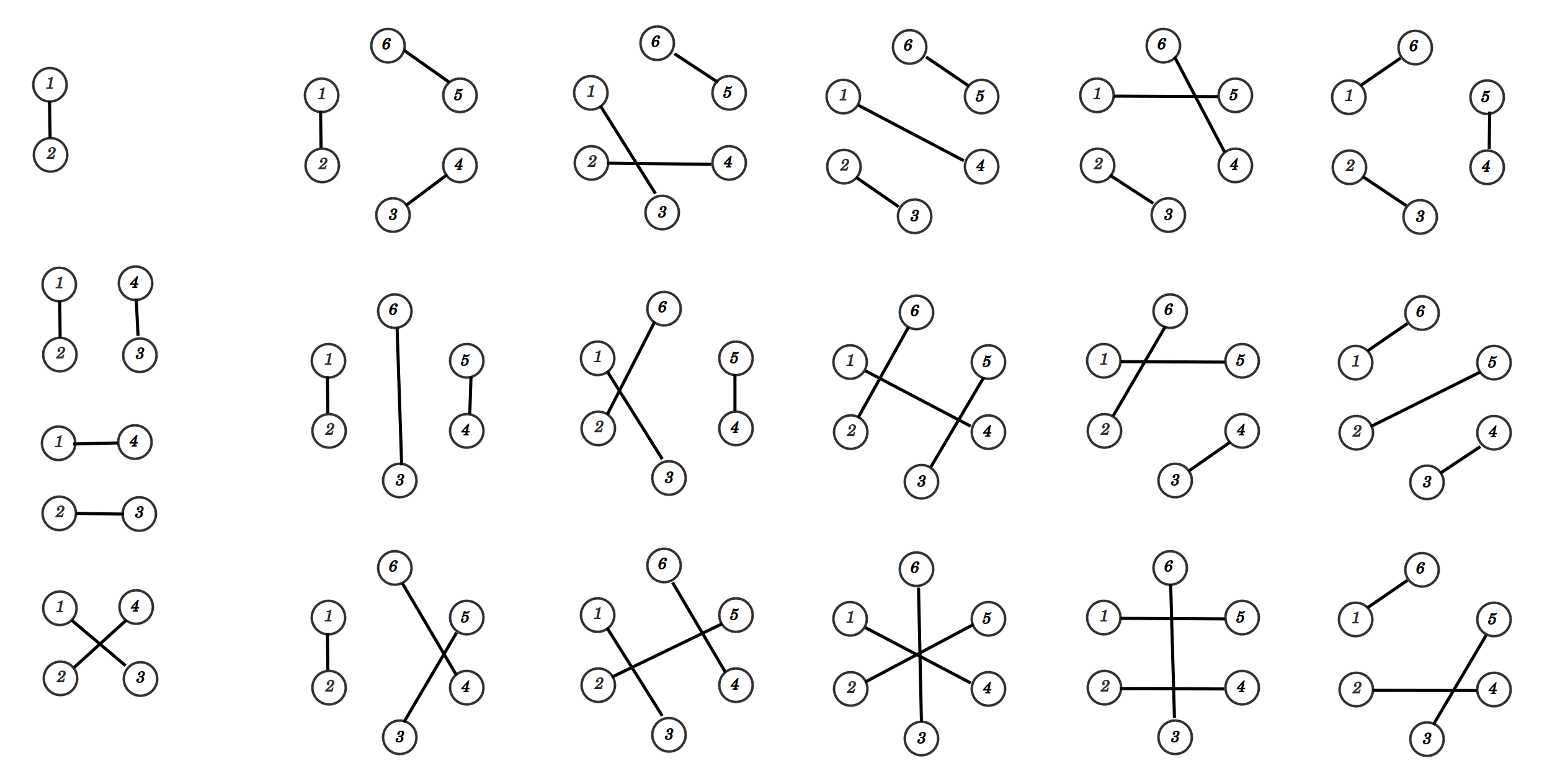

A similar calculation can be done for any moment, not just the fourth. If you think carefully about the process, you'll note that it looked basically like we were pairing derivatives. Whichever derivative in a pair came first in the sequence pulled down a tau, and then the other one differentiated that tau back to a 1. It turns out there are 3 ways to pair derivatives, corresponding to our final answer of 3. They are (with the corresponding sequence of terms):

(1,2), (3,4): 1 ⟶ τ ⟶ 1 ⟶ τ ⟶ 1

(1,3), (2,4): 1 ⟶ τ ⟶ ττ ⟶ τ ⟶ 1

(1,4), (2,3): 1 ⟶ τ ⟶ ττ ⟶ τ ⟶ 1

We can also make diagrams showing how things are paired up. Counting all the possible ways gives our answer for the moment:

The product of odd numbers comes from the fact that if we fix an order on the objects and always pick lowest unpaired object in that order to pair next, the number of options decreases by 2 each time. For example starting with six objects, there are 5 ways to pair off object 1. Then we're left with 4 objects, and there are 3 ways to pair off the first of those objects. Then we're left with 2 objects and there's only one way to pair those. Hence the 15 ways of pairing 6 objects.

This also makes it clear why the odd moments are 0. No matter how we try to pair up our derivatives, there will always be one left over. This insight about how Gaussian moments are given by counting the ways to pair up objects is known as

Isserlis's theorem or sometimes as Wick's theorem.

Gaussian integrals: the connection to cumulants

If we go back to the moment generating function for a Gaussian distribution, we see that it's very easy to compute the cumulant generating function as well:

By computing derivatives, it's easy to see that:

All cumulants higher than the second are zero! This provides a convenient algorithm to break up any moments higher than the second moment. Say we're given the fourth moment of our Gaussian:

Using the fact that all cumulants other than the second are 0:

⟨X⟩ = 0 = ⟨X⟩ - 4⟨X⟩⟨X⟩ - 3⟨X⟩ + 12⟨X⟩⟨X⟩ - 6⟨X⟩

⟨X⟩ = 4⟨X⟩⟨X⟩ + 3⟨X⟩ - 12⟨X⟩⟨X⟩ + 6⟨X⟩

Observe that we again obtained the expected answer of 3.

Arbitrarily high moments can be broken down into second moments in this way. This gives another intuition for why pairings show up in Isserlis's theorem: each pair corresponds to a second moment

⟨XX⟩, where all the

X are secretly the same random variable. (Isserlis's theorem actually knows about multi-dimensional Gaussians too, so in this more general setting these are allowed to be different.) This is a very special property of Gaussian distributions.